“Then just LLL to get the flag”

– Every CTF player

What is a lattice?

Less formally, a lattice is a set of vectors which are all integer linear combinations of a set of basis vectors. In mathematics these can live in all of , but in practice (since computers aren’t magic) we usually restrict ourselves to , or sometimes . We define for a basis where the lattice as

A crucial point about lattices is that they are not at all uniquely defined by their basis. If they were normal vector spaces this is fairly obvious, for example has an uncountably infinite number of bases.

The process of lattice reduction is that of trying to find a new basis for a given lattice which has nice properties or reveal something important about the structure of the lattice. Specifically they try to find a basis in which the vectors are short and roughly orthogonal to each other. Below is a visualization of lattice reduction in , try dragging around the starting basis and see how the reduced basis changes.

Starting Basis

Reduced Basis

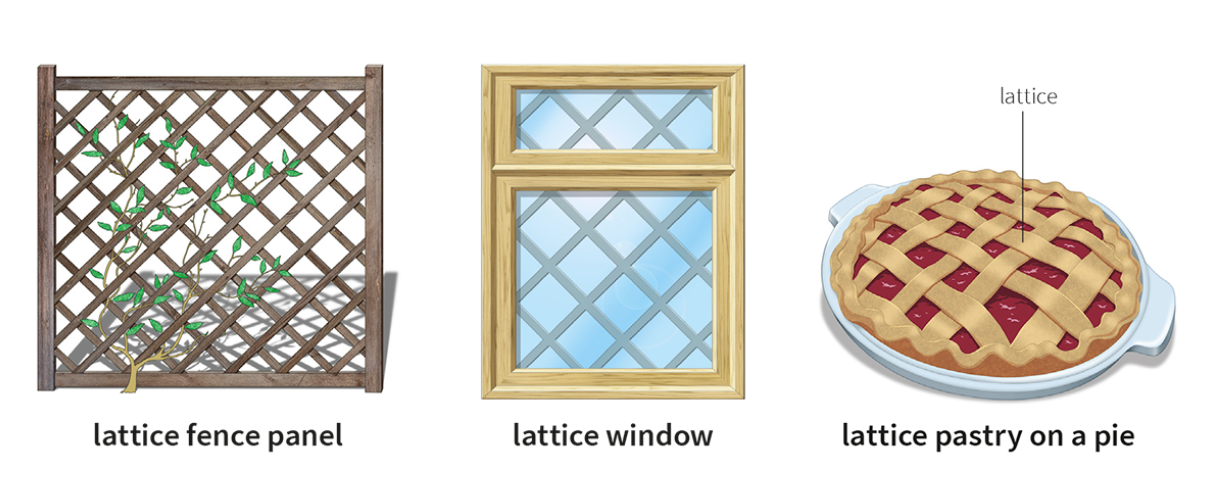

From this visual it should also be evident why we call them “lattices”

(Image from Oxford Learner’s Dictionaries)

Setting up equations

If you have taken a linear algebra class you are familiar with the concept of representing a system of equations as a matrix. We can for example represent the system

like this

where each row represents one of our equations. One can view this as finding a linear combination of the basis vectors which equal the target vector .

When reducing lattices we don’t try to solve an equality, we instead try to simultaneously minimize a set of expressions. More specifically we try to find (integer) linear combinations of the given basis vectors which are small in norm (length).

Extended Extended Euclidean Algorithm

The extended euclidean algorithm is an algorithm which let’s you, given , find such that .

Let us try and solve this problem using lattice reduction, but for numbers . I.e find such that .

An important fact which makes this relevant to lattice reduction is the fact that is the smallest possible non-zero number you can get by integer linear combinations of . With that in mind let’s consider the follow lattice

Due to unexplainable convention, when denoting a lattice basis as a matrix we let each row represent a basis vector, not column. Therefore this is a lattice with basis vectors in . If we reduce this lattice it will find a basis for the same lattice but with shorter vectors. We know that is the shortest possible vector in this case, so we should expect it to be in the basis.

1sage: a, b, c = [7*random_prime(2**64) for _ in range(3)]

2sage: B = matrix([[a], [b], [c]]); B

3[81605617467706236653]

4[79792519733247444991]

5[48688238757238887163]

6sage: B.LLL()

7[ 0]

8[ 0]

9[-7]As you can see we found not only that , but also that no other vectors are needed to represent the lattice (the other vectors are ). This means that any linear combination of will be a multiple of , which I hope makes sense.

We did technically find and not , but they are completely equivalent in the eyes of the lattice reduction algorithm, since they have the exact same absolute size; there is no distinction between positive and negative numbers.

Extracting the coefficients

Computing was not our goal, we want to find the specific integers such that . Therefore let’s consider the following lattice basis

If this lattice is reduced such that appears in the first column as before, then that means that it had to have gotten there by some linear combination of the rows, and this linear combination will be “captured” by the last three columns such that we can extract it:

Let’s try this in practice

1sage: B = matrix([[a, 1, 0, 0],

2....: [b, 0, 1, 0],

3....: [c, 0, 0, 1]])

4sage: B

5[81605617467706236653 1 0 0]

6[79792519733247444991 0 1 0]

7[48688238757238887163 0 0 1]

8sage: B.LLL()

9[ 654941 -3003666 1473648 2619317]

10[-3600737 -138931 1896467 -2875157]

11[-5740623 515249 -1883016 2222372]Not exactly what we wanted, we no longer get in the first column. This comes back to the fact that the lattice reduction algorithm has no preference for which columns it wants to minimize, it tries to minimize the total size of the vector, and all entries in it will contribute to that. The basis therefore looks “smeared out”; the size of the first column has spread into the others to make everything as even as possible, while still being a basis for the same lattice.

Now we introduce the concept of weighting. Consider the following lattice basis

Where is some sufficiently large number. The point is to make the size of (the value in the first columnn) matter a lot more in the calculation of the final size, since it will be multiplied by the large number . It will thus try to minimize the first column as much as possible, the other columns will be minimized as well but only as a second priority, to anthropomorphize the algorithm a bit.

1sage: W = 10**10

2sage: B = matrix([[W*a, 1, 0, 0],

3....: [W*b, 0, 1, 0],

4....: [W*c, 0, 0, 1]])

5sage: B

6[816056174677062366530000000000 1 0 0]

7[797925197332474449910000000000 0 1 0]

8[486882387572388871630000000000 0 0 1]

9sage: B.LLL()

10[ 0 -125321082 2302873871 -3564006605]

11[ 0 3187908539 -3079199380 -296871849]

12[-70000000000 -817817861 -500025365 2190196607]This time we get in the first column, exactly like we wanted, and in the last three columns we have the coefficients we were looking for. Let’s negate them (to get and not ) and we can then verify that

We not only found a solution, we found a small solution since the lattice reduction algorithm still tried to minimize the non-weighted columns.

Finding large solutions

If you are not interested in the size of the coefficients you shouldn’t use LLL can instead look at the transformation matrix such that where is the reduced basis:

1sage: B = matrix([[a], [b], [c]]); B

2[81605617467706236653]

3[79792519733247444991]

4[48688238757238887163]

5sage: Bprim, U = B.LLL(transformation=True)

6sage: Bprim

7[ 0]

8[ 0]

9[-7]

10sage: U

11[ -11398931390463920713 11657945352529462379 0]

12[ 2761828907117753380633971705189402507 -2824584986900649619836477317376005974 1]

13[ 397073355769105623 -406095915830696686 0]The last row of tells you what linear combination of the rows of resulted in the last column of . In this case it tells us that

This is obviously a much larger solution, and it doesn’t even try to use to spread the coefficients out at all, but in some cases this is all you need.

Dealing with constant terms

Now let’s try to find small solutions to for some . We might start by considering a lattice basis like this

A vector of this lattice will be of the form

We want to solve . So finding a vector in the lattice where the first entry and will mean that we have a solution to our equation sitting in the middle three columns.

1sage: a, b, c, d = [random_prime(2**64) for _ in range(4)]

2sage: B = matrix([[a, 1, 0, 0, 0],

3....: [b, 0, 1, 0, 0],

4....: [c, 0, 0, 1, 0],

5....: [-d, 0, 0, 0, 1]])

6sage: B.LLL()

7[-17862 -12131 31485 -19701 -3295]

8[ 17571 -16241 -3688 4830 -49164]

9[ 14119 -54046 32184 54497 3230]

10[109566 1184 28568 -28433 15369]This doesn’t work as-is of course. Let’s start by once again weighting the “equation” column.

1sage: W = 10**10

2sage: B = matrix([[W*a, 1, 0, 0, 0],

3....: [W*b, 0, 1, 0, 0],

4....: [W*c, 0, 0, 1, 0],

5....: [W*-d,0, 0, 0, 1]])

6sage: B.LLL()

7[ 0 769935 -704630 -248469 361208]

8[ 0 -301057 -832236 1701572 305307]

9[ 0 -139249 3658597 1018036 9450478]

10[-10000000000 184827 -421367 -614860 -1465795]We are now interested in the rows where the first entry is , of which there are , but sadly none of them have . To fix this we want to also punish vectors where the last entry is large, we do that by multiplying the last column by as well.

1sage: B = matrix([[W*a, 1, 0, 0, 0],

2....: [W*b, 0, 1, 0, 0],

3....: [W*c, 0, 0, 1, 0],

4....: [W*-d,0, 0, 0, W]])

5sage: B.LLL()

6[ 0 -842560731 2260007771 -1330596802 0]

7[ 0 -4768843298 1718011009 6023152595 0]

8[-10000000000 1648147427 -1439660089 -1046238807 0]

9[ 0 1090481115 -1275699103 -296675661 10000000000]This time we found a solution where and , and indeed

1sage: 1090481115*a - 1275699103*b - 296675661*c == d

2TrueAn important thing to note is why the last column is not just fully since it has such a large weight. This is because it still needs to be a basis for the same lattice, if all of the last column was then we would not have the same span as the original lattice, as it did not.

Weight matrices

In more complex systems you might have several different weights for different equations and variables. It can be handy to separate the weighting from the actual lattice basis, which also makes it easier to analyze the results. If we apply this for the previous example we would have

Each entry on the diagonal of denotes how much to scale each column of by, so will equal the basis we constructed previously. In code we can therefore solve the problem like this

1sage: W = diagonal_matrix([10**10, 1, 1, 1, 10**10])

2sage: B = matrix([[a, 1, 0, 0, 0],

3....: [b, 0, 1, 0, 0],

4....: [c, 0, 0, 1, 0],

5....: [-d, 0, 0, 0, 1]])

6sage: Bprim = (B*W).LLL()/W

7[ 0 -842560731 2260007771 -1330596802 0]

8[ 0 -4768843298 1718011009 6023152595 0]

9[ -1 1648147427 -1439660089 -1046238807 0]

10[ 0 1090481115 -1275699103 -296675661 1]

11sage: v = next(v for v in Bprim if v[0]==0 and abs(v[-1])==1)

12sage: v *= sign(v[-1])

13sage: v

14(0, 1090481115, -1275699103, -296675661, 1)

15sage: v[1:-1] * vector([a, b, c]) == d

16TrueWe apply the weighting, do the LLL reduction, and then remove it to more clearly see what the value of our actual equations are.

Matrix construction tips

Constructing the actual lattice bases can be quite fiddly, here are some nice Sage functions which can help you out.

When constructing lattices like the one above it is nice to use a combination of

.augment() and

identity_matrix(n)

1B = matrix([a, b, c, d]).T.augment(identity_matrix(4))More complex matrices are nice to construct using

block_matrix. It automatically expands scalars into multiples of the appropriate identity matrix. So we can for example construct

Like this

1B = block_matrix([

2 [A, 1],

3 [p, 0]

4])When finding small vectors in the span of you might construct something like

1B = A.change_ring(ZZ).stack(p*identity_matrix(A.ncols()))This will work for the most part, but tools like flatter dislike having lattice bases with more rows than columns. This can be solved like this

1B = p*identity_matrix(ZZ, A.ncols())

2B.set_block(0, 0, A.rref())Solving systems of equations

It is now not a very large leap of faith to consider systems of linear equations which we want to find small solutions to. Instead of having a single “equation column” we have several, all of which are given a large weight compared to the coefficients in order to prioritize minimizing them. See the following code example for how you can do this

1sage: A = random_matrix(ZZ, 2, 5, x=2**16)

2sage: tgt = A*random_vector(5, x=2**16)

3sage: L = block_matrix([

4....: [A.T, 1, 0],

5....: [matrix(-tgt), 0, 1]])

6sage: L

7[ 30863 56115| 1 0 0 0 0| 0]

8[ 37085 54884| 0 1 0 0 0| 0]

9[ 29462 2928| 0 0 1 0 0| 0]

10[ 11696 3725| 0 0 0 1 0| 0]

11[ 51778 37510| 0 0 0 0 1| 0]

12[-----------------------+----------+--]

13[-3753677194 -4314585469| 0 0 0 0 0| 1]

14sage: W = diagonal_matrix([2**16]*A.nrows() + [1]*A.ncols() + [2**16])

15sage: Lprim = (L*W).LLL()/W

16sage: Lprim # the last row contains the solution here

17[ 0 0 207 -25 435 -363 -271 0]

18[ 0 0 -1106 1624 428 60 -761 0]

19[ 0 0 1018 -688 299 2018 -740 0]

20[ 0 1 -331 701 269 740 -625 0]

21[ 1 0 -505 556 102 -705 4 0]

22[ 0 0 28479 29591 9315 4976 27902 1]

23sage: v = next(v for v in Lprim if v[:2].is_zero() and abs(v[-1]) == 1)

24sage: v *= sign(v[-1]) # in case the last entry is negative

25sage: v

26(0, 0, 28479, 29591, 9315, 4976, 27902, 1)

27sage: A * v[2:-1] == tgt

28TrueSolving modular equations

Let’s say we want to find small solutions to the following system of equations

This can be rewritten as a normal integer equation

For some , which we importantly dont require to be small. For this problem you would construct a lattice like this

with the weight matrix

Since there are no other coefficients on the rows containing the moduli and there is no incurred “cost” in using them as much as possible. So they will be added/subtracted from the result vector as many times as is needed to bring the first column into the range and the second into , which is the smallest you can get them.